Optimizing Golang Applications for Kubernetes: Best Practices for Reducing Server Load

We’re Earthly. We make building software simpler and therefore faster using containerization. If you’re looking to optimize your Go applications builds, Earthly can be a great addition to your toolkit. Check it out.

Optimizing server load is crucial for ensuring efficient performance and scalability of Golang applications running on Kubernetes. As organizations increasingly adopt containerization and Kubernetes for deploying and managing their applications, it becomes imperative to implement best practices for reducing server load to achieve optimal resource utilization, cost-efficiency, and an improved user experience.

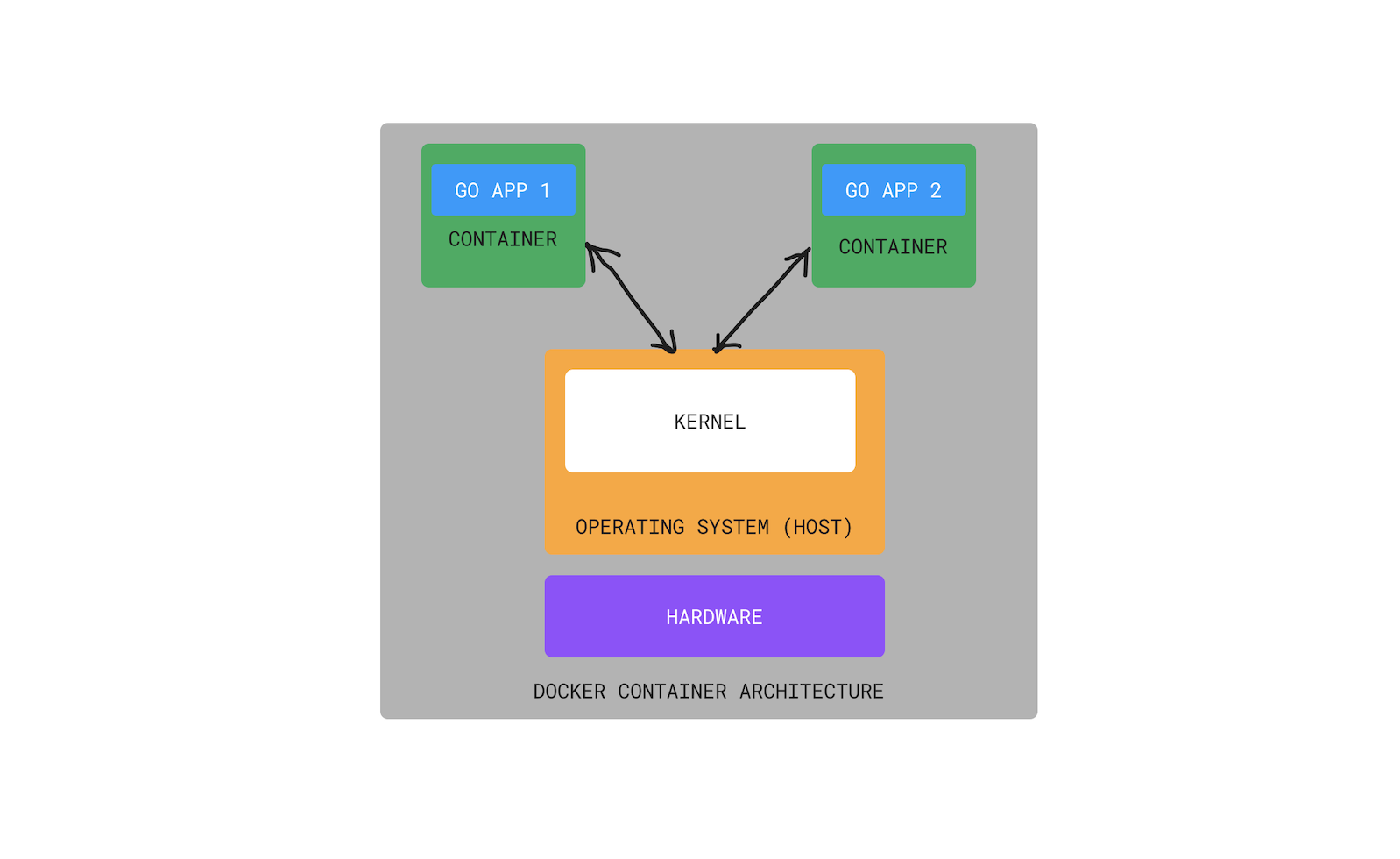

Multiple containers running Golang applications can be set up in a Kubernetes cluster and deployed on various nodes. Each container can use system resources like CPU, memory, and storage. If these resources are not effectively managed, it may lead to increased server load, decreased performance, and higher expenditures. Therefore, Golang application optimization for Kubernetes is crucial to accomplishing effective resource utilization, lowering server load, and guaranteeing smooth application functioning in a production environment.

In this article, we’ll examine the best methods for enhancing your Golang application for Kubernetes, with an emphasis on reducing server load. We’ll talk about methods including monitoring with readiness and liveness probes, utilizing auto-scaling, and optimizing resource usage. You may improve the performance and scalability of your Golang application running on Kubernetes by putting these best practices into effect, which will improve the user experience, save costs, and increase operational efficiency.

All the code examples for this article can be found on GitHub.

Prerequisites

To follow along with this tutorial, you need a mid-level knowledge of Go and Kubernetes.

In addition, you need to have Go, Docker, and Kubernetes (as well as other tools that will allow you to work with Kubernetes like Kubectl) installed and configured on your system.

You can verify that you’ve installed Go by opening a terminal or command prompt and typing the following command:

go versionIt should return something similar to this:

go version go1.19.3 darwin/amd64Then, verify Docker is installed with:

docker --versionWhich will output something like this:

Docker version 20.10.22, build 3a2c30bAlso, verify Kubernetes is installed on your PC with:

kubectl version --clientThe output should be something similar to:

Client Version: version.Info{Major:"1", Minor:"25", \

GitVersion:"v1.25.4", \

GitCommit:"872a965c6c6526caa949f0c6ac028ef7aff3fb78", \

GitTreeState:"clean", BuildDate:"2022-11-09T13:36:36Z", \

GoVersion:"go1.19.3", Compiler:"gc", Platform:"darwin/amd64"}

Kustomize Version: v4.5.7The exact output may vary depending on the version of Kubectl installed on your PC. However, the output should include the version information of the Kubectl client, which includes the major and minor version numbers, the Git version, the Git commit, and the build date.

Understanding Golang Applications and Kubernetes

Google developed Golang, sometimes known as “Go”, with the objectives of being efficient, concurrent, and scalable. It is effective for creating high-performance software, particularly for applications that require concurrent processing, like web servers, networked software, and distributed systems.

The deployment, scaling, and management of containerized applications are all automated by the open-source container orchestration platform Kubernetes. By abstracting away the underlying infrastructure’s specifics and facilitating effective resource usage, scalability, and fault tolerance, it offers a flexible and scalable framework for deploying and managing containerized applications across a cluster of nodes.

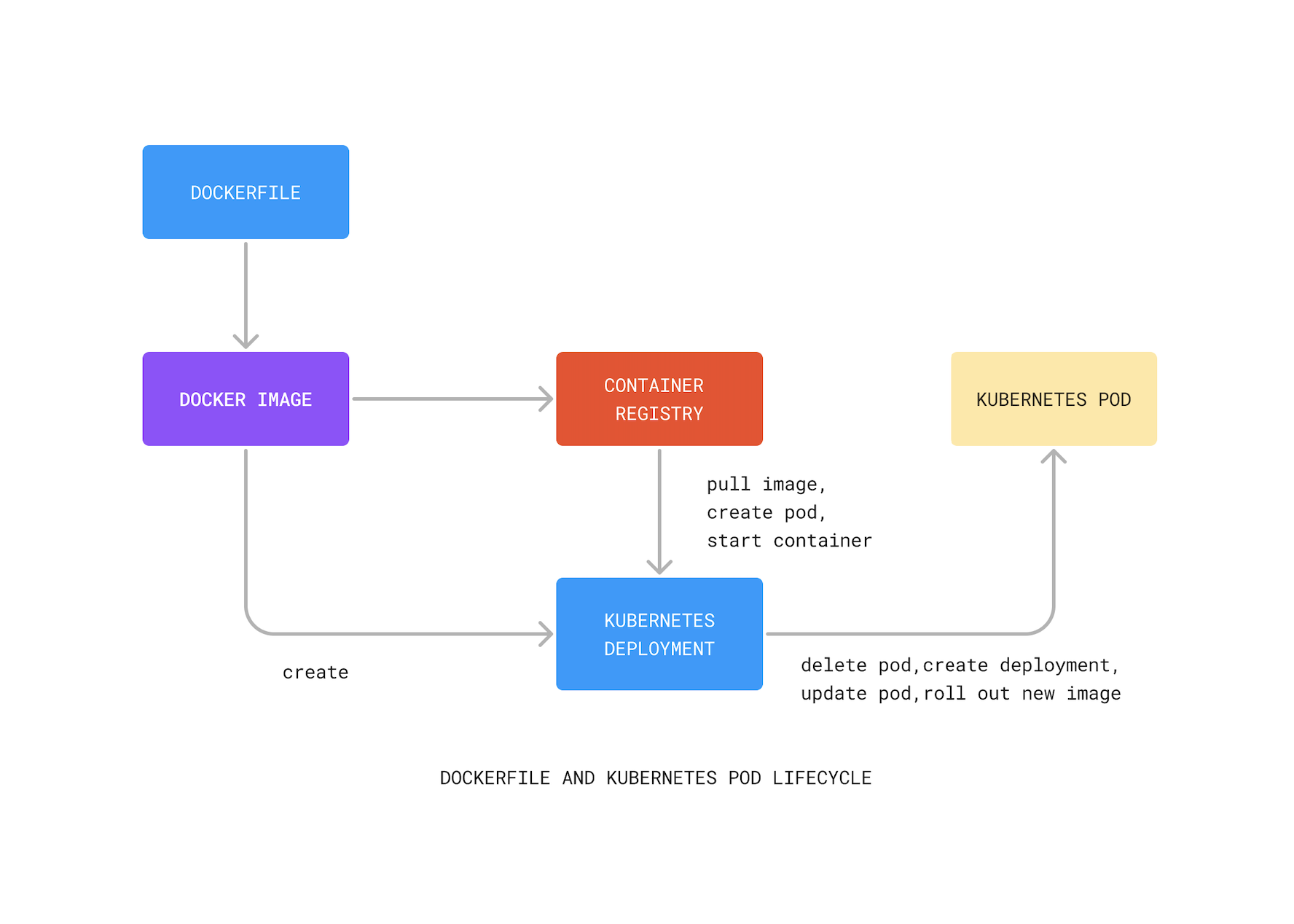

Golang applications are generally packaged as Docker containers when they are deployed on Kubernetes. These segregated, lightweight environments isolate the program and all of its dependencies. These containers may be simply deployed and managed by Kubernetes, enabling effective resource use and automatic scaling according to the requirements of the application.

Golang applications running on Kubernetes can take advantage of several features that improve performance, scalability, and resilience. For instance, Kubernetes enables the deployment of numerous instances of the same application across various cluster nodes to disperse the load and improve availability. The deployment, upkeep, and scalability of Golang programs can also be enhanced by load balancing, service discovery, and rolling update tools in Kubernetes.

To ensure resource efficiency and prevent resource contention or overutilization, Your Golang application can also benefit from Kubernetes’ built-in resource management capabilities, including CPU and memory restrictions, resource quotas, and resource profiling. Additionally, Kubernetes offers capabilities for logging and monitoring that may assess the functionality and general health of Golang programs, facilitating efficient problem-solving and debugging.

Concurrency, efficiency, simplicity, community, and alignment with cloud-native principles make Golang a popular choice for developing Kubernetes-based applications, enabling developers to create high-performance, scalable, and resilient applications that can be easily deployed and managed in a containerized environment.

Overall, the combination of Golang and Kubernetes provides a powerful platform for building and deploying high-performance, scalable, and resilient applications. Optimizing Golang applications for Kubernetes involves leveraging the features provided by Kubernetes for efficient resource utilization, scaling, request, response, and error handling, to ensure optimal performance and reliability in a containerized environment.

Best Practices for Optimizing Golang Applications for Kubernetes

Golang applications running on Kubernetes need to be optimized to ensure efficient resource utilization, scalability, and reliability in a containerized environment. Kubernetes provides features such as horizontal scaling, load balancing, and resource management, which can be leveraged to optimize Golang applications for better performance and resilience. Optimizing Golang applications for Kubernetes involves leveraging Kubernetes features, such as resource limits, service discovery, and monitoring, to ensure optimal performance and reliability in a containerized environment and optimizing Go code such as using Go garbage collection and connection pooling. This optimization helps ensure that Golang applications running on Kubernetes can efficiently handle high loads, scale dynamically, and provide reliable services to users.

Some of these optimization techniques include:

Use Minimal and Efficient Container Base Images:

Your containers’ performance and resource utilization may be considerably impacted by the base images you use for your Golang application. The use of minimal base images created especially for running containerized apps is crucial. One of the most well-liked choices is the official Golang base image offered by Docker Hub, which is based on the official Golang distribution and offers a basic runtime environment. You might also think about adopting lighter options, such as the Golang image built on Alpine Linux, which has a smaller footprint than the typical Golang image. Alpine is a lightweight Linux distribution that is commonly used in Docker images to minimize image size and reduce the attack surface.

The following is an example of Docker code that uses the official Golang base image:

# Use the official Golang base image FROM golang:1.16 # Set working directory WORKDIR /app # Copy and build the Go application COPY . . RUN go build -o myapp # Run the application CMD ["./myapp"]The Docker command below uses a specific version of the official Go base image that is based on the Alpine Linux:

FROM golang:1.16-alpineThe FROM docker statement specifies the base image to be used, which is golang:1.16-alpine in this case.

Optimize Resource Allocations: Efficiently allocating resources to your Golang containers is crucial for reducing server load and optimizing performance. It is essential for improving application performance, avoiding overprovisioning, and enabling scalability. If resources (such as CPU and memory) are not allocated efficiently, some containers may not have enough resources, which can lead to poor performance. By optimizing resource allocations, you can ensure that all containers have the resources they need to run smoothly, which can lead to improved performance. Kubernetes provides mechanisms to set resource limits and requests for containers, which can help prevent over-allocation or under-allocation of resources. It is essential to carefully configure these settings based on the resource requirements of your Golang application.

To demonstrate optimizing resource allocations, we will create a YAML file where we can set resource limits and requests for our container:

#..... # ..... containers: - name: my-golang-container image: my-golang-image resources: limits: cpu: 500m memory: 512Mi requests: cpu: 200m memory: 256MiIn the YAML file above, the container is defined with specific resource limits and requests for CPU and memory.

resourcesis a section that defines the CPU, memory resource limits, and requests for the container.limitsspecify the maximum amount of resources that the container is allowed to consume. In this case, it sets a limit of 500 milliCPU (0.5 CPU cores) and 512 megabytes of memory.

requestsspecify the minimum amount of resources that the container requires to run. In this case, it requests 200 milliCPU (0.2 CPU cores) and 256 megabytes of memory.In Kubernetes, CPU resources are allocated to containers to ensure that each container has access to the necessary computing power. By setting CPU limits and requests, you can prevent containers from consuming too much CPU and causing other containers to suffer from resource starvation. This efficient allocation of CPU resources helps ensure that your application runs smoothly and that the server load remains stable.

Memory, on the other hand, is a crucial resource for applications that store and manipulate data. In Kubernetes, you can allocate memory resources to containers to ensure that they have enough space to run efficiently. By setting memory limits and requests, you can prevent containers from consuming too much memory and causing the system to run out of memory, which can result in crashes and other issues. Efficient allocation of memory resources helps ensure that your applications are performing optimally and that the server load is kept under control

By carefully when setting CPU and memory limits and requests, you need to ensure that your containers have access to the resources they need to run efficiently without overloading the server. This can help prevent downtime, crashes, and other issues that can negatively impact your business.

In determining the amount of CPU and memory to use, the requirements of the application running inside the container will also affect the size of the container. For example, if the application requires a large amount of memory to process data, you may need to increase the memory limits of the container accordingly. After deploying the container, you will need to monitor its resource usage and optimize its size if necessary. You can use tools like Kubernetes’ Horizontal Pod Autoscaler to automatically adjust the number of replicas based on resource utilization.

The complete Kubernetes deployment configuration for setting resource limits and requests looks like this:

apiVersion: apps/v1 kind: Deployment metadata: name: my-golang-app spec: replicas: 3 selector: matchLabels: app: my-golang-app template: metadata: labels: app: my-golang-app spec: containers: - name: my-golang-container image: my-golang-image resources: limits: cpu: 500m memory: 512Mi requests: cpu: 200m memory: 256MiOptimize Garbage Collection: Garbage collection (GC) is a critical aspect of memory management in Golang applications. Kubernetes provides options for configuring garbage collection settings, such as the GOGC (Go Garbage Collector) environment variable, which controls the percentage of heap that triggers a garbage collection cycle. By optimizing garbage collection settings, you can reduce memory usage and improve the overall performance of your Golang containers.

When running a Golang app in a Kubernetes cluster, a poorly optimized GC can cause several issues that impact the server’s performance. The most common issue is memory leaks; If the GC algorithm is not efficient, it may not be able to release memory that is no longer being used by the application. This leads to memory leaks, which can cause the app to consume more memory than necessary which leads to worse performance and higher server load.

The following code optimizes garbage collection settings in Golang. In the code, we need to import two packages: “os” and “fmt”. The “fmt” package is used for formatting input and output, the “os” package provides a platform-independent interface to operating system functionality :

package main import ( "fmt" "os" )Define the main function of the Go program:

func main() {We decide to get the current value of the “GOGC” variable to the console, using the

fmt.Println()function. The “GOGC” variable is a setting that controls the garbage collector in Go.// Get current GOGC value gogc := os.Getenv("GOGC") fmt.Println("Current GOGC value:", gogc);We can now go ahead to set the “GOGC” variable to a value of 50. This changes the behavior of the Go garbage collector and may improve the performance of the program:

// Set GOGC value to 50 os.Setenv("GOGC", "50") // Run your Golang application // ... }The whole code looks as shown below:

package main import ( "fmt" "os" ) func main() { // Get current GOGC value gogc := os.Getenv("GOGC") fmt.Println("Current GOGC value:", gogc); // Set GOGC value to 50 os.Setenv("GOGC", "50") // Run your Golang application // ... }The

os.Setenv("GOGC", "50")line sets the value of GOGC to 50. The GOGC parameter determines the percentage of heap that triggers garbage collection in Go. A lower value like 50 means garbage collection will be triggered more frequently, while a higher value like 100 means garbage collection will be triggered less frequently. Setting GOGC to 50 in this case is likely an attempt to increase the frequency of garbage collection.Frequent garbage collection can help ensure that a program is using only the memory it needs, freeing up resources for other processes running on the same server. It is important to monitor the program’s performance and adjust the GOGC value as necessary to achieve the right balance between memory usage and garbage collection overhead.

Use Connection Pooling: Connection pooling is a technique that allows you to reuse database connections instead of establishing a new connection for each request. This can greatly reduce the overhead of establishing and tearing down connections, resulting in improved performance and reduced server load.

In a Kubernetes environment, connection pooling can help reduce the server load by minimizing the number of connections made to the database. When an application running in Kubernetes establishes a connection to a database, it uses a certain amount of resources, such as CPU, memory, and network bandwidth. These resources are finite and can become exhausted if too many connections are established.

By using connection pooling, our Golang application can minimize the number of connections made to the database, reducing the load on the server. Connection pooling achieves this by reusing existing connections, rather than establishing new ones for each request. This reduces the overhead of establishing and tearing down connections and also minimizes the number of resources used by the application to make requests to the database.

Golang provides libraries like “database/sql” that support connection pooling out of the box.

Here is how to implement connection pooling in Golang:

We start by defining package main statement to identify this Go code file as part of the main package. The main package is required for creating an executable program:

package mainWe also have to import a list of external packages that this Go program will require. We import the standard library packages database/sql, fmt, and log, as well as a third-party package github.com/go-sql-driver/mysql, which provides a MySQL driver for the database/sql package:

import ( "database/sql" "fmt" "log" "time" _ "github.com/go-sql-driver/mysql" )We create a new database connection pool using the

sql.Open()function, which takes two arguments: the name of the database driver to use (mysql) and a connection string with the user credentials, hostname, port, and database name. If the connection to the database fails, the program logs the error message and exits using thelog.Fatal()method. Otherwise, the program defers the closing of the database connection until the end of the function:// Create a database connection pool db, err := sql.Open("mysql", "user:password@tcp(db-hostname:3306)/mydb") if err != nil { log.Fatal("Failed to connect to database:", err) } defer db.Close()We have a connection pool but we need to configure the database connection pool settings using methods on the db object. The

SetMaxOpenConns()method sets the maximum number of open connections to the database (10 in this example), whileSetMaxIdleConns()method sets the maximum number of idle connections to keep in the pool (5). Finally,SetConnMaxLifetime()method sets the maximum lifetime of a connection (5 minutes in this example):// Set connection pool settings db.SetMaxOpenConns(10) db.SetMaxIdleConns(5) db.SetConnMaxLifetime(time.Minute * 5) // Use the connection pool to perform database operations // ... }The whole code looks as shown below:

package main import ( "database/sql" "fmt" "log" "time" _ "github.com/go-sql-driver/mysql" ) func main() { // Create a database connection pool db, err := sql.Open("mysql", "user:password@tcp(db-hostname:3306)/mydb") if err != nil { log.Fatal("Failed to connect to database:", err) } defer db.Close() // Set connection pool settings db.SetMaxOpenConns(10) db.SetMaxIdleConns(5) db.SetConnMaxLifetime(time.Minute * 5) // Use the connection pool to perform database operations // ... }Determining appropriate values for the

db.SetMaxOpenConns(),db.SetMaxIdleConns(), anddb.SetConnMaxLifetime()methods depends on various factors such as:- The expected traffic and workload on the database.

- The number of concurrent connections that is expected.

- The capacity of the database server.

- The average response time of the database queries.

- The expected load and performance of other applications that use the same database.

In general, a good starting point for setting these values would be:

db.SetMaxOpenConns()should be set to a value that is less than or equal to the maximum number of connections that the database server can handle. This value should be set high enough to handle expected traffic and workload but low enough to avoid overwhelming the database server. A value between 5 to 50 is often reasonable for most web applications.db.SetMaxIdleConns()should be set to a value that is sufficient to handle expected idle connections. This value should be set higher if the application frequently opens and closes connections. A value between 2 to 10 is often reasonable.db.SetConnMaxLifetime()should be set to a value that is greater than the average time of the database queries. This value should be set high enough to avoid frequent connection churn but low enough to prevent long-lived idle connections from consuming database resources unnecessarily. A value of a few minutes to a few hours is often reasonable.

It’s important to note that these values should be periodically reviewed and adjusted based on changing workload and performance requirements. Monitoring the database server and the application’s performance metrics can help to identify if these values need to be adjusted.

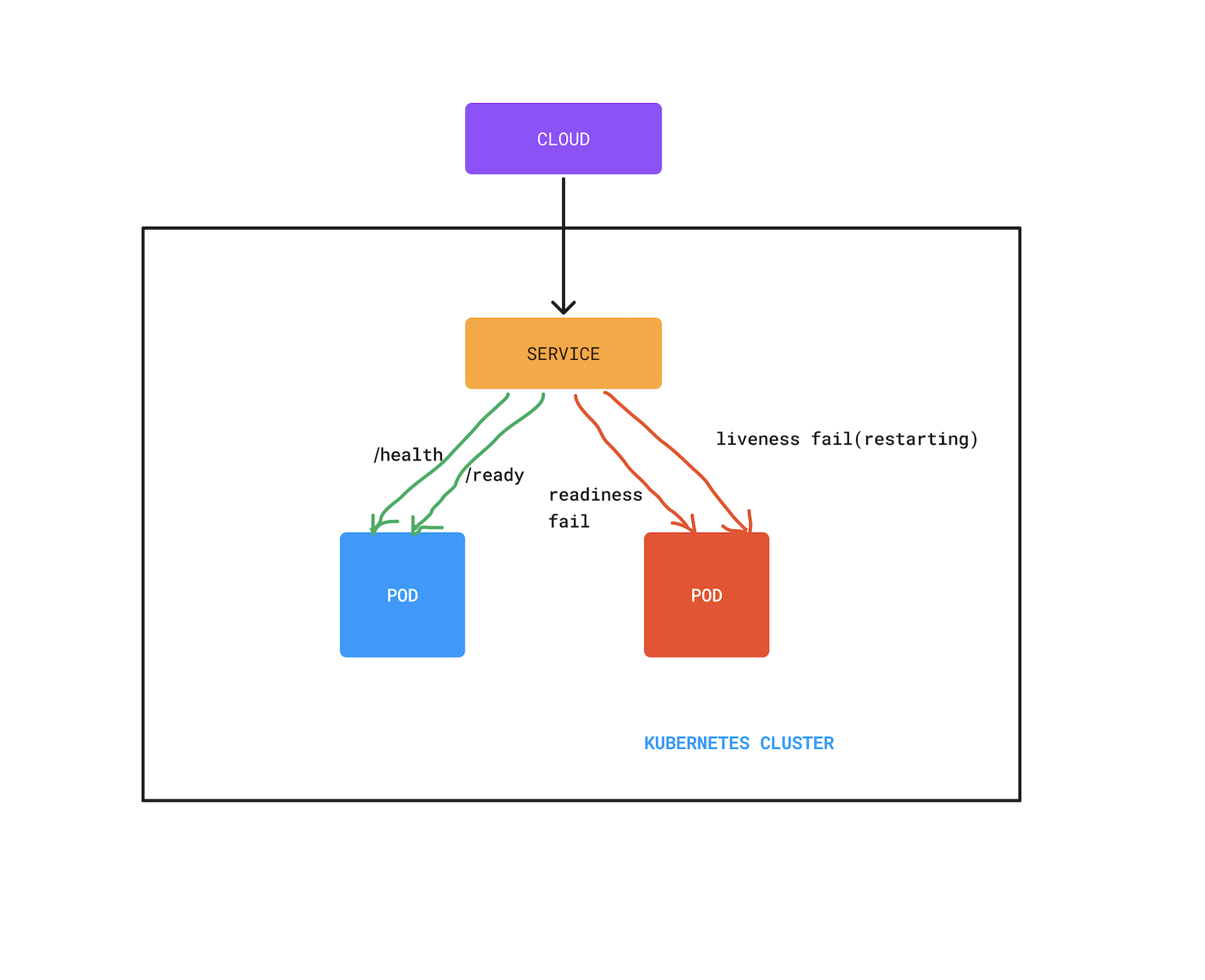

Utilize Health Checks and Readiness Probes

Kubernetes provides health checks and readiness probes that allow you to monitor the status of your containers and determine their readiness to receive traffic. By utilizing these features, you can ensure that only healthy and ready containers receive traffic, reducing server load caused by unhealthy or unready containers. You can implement custom health checks and readiness probes in your Golang application to provide accurate information about its status.

To monitor the status of your containers, let’s start by adding a readiness probe:

....

....

containers:

....

readinessProbe:

httpGet:

path: /health

port: 8080

initialDelaySeconds: 10

periodSeconds: 5readinessProbe defines a readiness probe for the container. Readiness probes are used to check if a container is ready to accept traffic.

httpGet specifies that the readiness probe should use an HTTP GET request to check the health of the container.

path: /health indicates the path to be used for the HTTP GET request to check the health of the container. In this case, it is set to “/health”.

initialDelaySeconds: 10 specifies the number of seconds to wait before starting the readiness probe after the container starts. In this case, it is set to 10 seconds.

periodSeconds: 5 specifies the interval in seconds between consecutive readiness probe checks. In this case, it is set to 5 seconds.

Now we can add a liveness probe immediately after the readiness probe:

....

....

containers:

....

readinessProbe:

.....

livenessProbe:

httpGet:

path: /health

port: 8080

initialDelaySeconds: 30

periodSeconds: 10livenessProbe defines a liveness probe for the container. Liveness probes are used to check if a container is running and healthy.

httpGet specifies that the liveness probe should use an HTTP GET request to check the health of the container.

path: /health indicates the path to be used for the HTTP GET request to check the health of the container. In this case, it is set to “/health”.

initialDelaySeconds: 10 specifies the number of seconds to wait before starting the liveness probe after the container starts. In this case, it is set to 10 seconds.

periodSeconds: 5 specifies the interval in seconds between consecutive liveness probe checks. In this case, it is set to 5 seconds.

The YAML file should look like this:

apiVersion: apps/v1

kind: Deployment

metadata:

name: my-golang-app

spec:

replicas: 3

selector:

matchLabels:

app: my-golang-app

template:

metadata:

labels:

app: my-golang-app

spec:

containers:

- name: my-golang-container

image: my-golang-image

ports:

- containerPort: 8080

readinessProbe:

httpGet:

path: /health

port: 8080

initialDelaySeconds: 10

periodSeconds: 5

livenessProbe:

httpGet:

path: /health

port: 8080

initialDelaySeconds: 30

periodSeconds: 10Optimizing Golang applications for Kubernetes requires careful consideration of resource allocation, garbage collection settings, network communication, and container health. By following best practices such as using minimal and efficient base images, optimizing resource allocations, using connection pooling, and implementing health checks and readiness probes, you can reduce server load and improve the performance of your Golang applications in Kubernetes. By leveraging the power of Kubernetes and Golang, you can build scalable and high-performance containerized applications that are optimized for modern cloud environments.

Techniques for Reducing Server Load

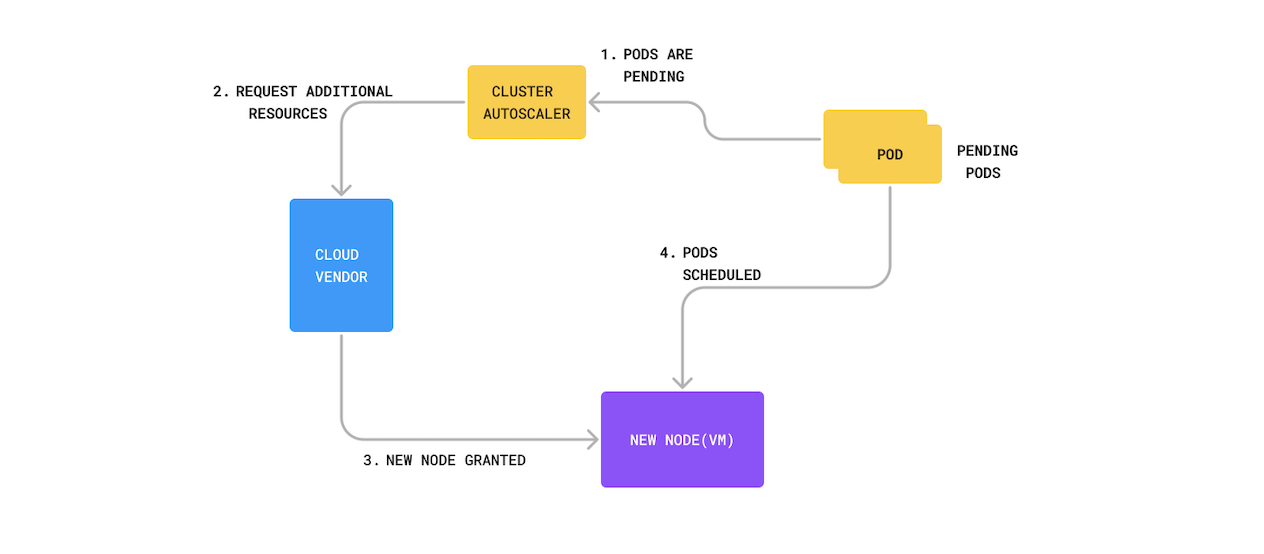

Implementing Auto-scaling: Auto-scaling allows automatically adjusting the number of pods based on resource utilization. This can be achieved using Kubernetes Horizontal Pod Autoscaler (HPA), which automatically scales the number of pods up or down based on CPU or memory utilization.

As shown in the diagram, the cluster autoscaler automatically provisions new nodes to handle the increased load in a Kubernetes cluster, ensuring efficient resource utilization and preventing server overload.

Load Balancing with Kubernetes: Kubernetes provides built-in mechanisms for load balancing, such as services and ingress. Services expose pods to the network and distribute incoming traffic across multiple pods using load-balancing algorithms such as round-robin or IP hash.

Caching and Utilizing Kubernetes Caching Systems: Kubernetes provides caching systems such as Memcached and Redis that can be deployed as pods and used to store frequently accessed data, reducing the need to query backend services repeatedly and reducing server load.

Conclusion

Congratulations! You’ve now moved closer to mastering optimization of Golang applications in Kubernetes.

Optimizing Golang applications for Kubernetes is essential for efficient and reliable deployment. Key practices include minimizing container size, optimizing resource allocation and garbage collection, implementing health checks and readiness probes, and leveraging Kubernetes for scaling and load balancing. These strategies reduce server load, boosting performance and cost efficiency.

If you’re looking for reliable, reproducible builds for your Golang apps, you might want to give Earthly a spin. It’s a tool that can further enhance your development process, ensuring consistency across different environments.

Earthly makes CI/CD super simple

Fast, repeatable CI/CD with an instantly familiar syntax – like Dockerfile and Makefile had a baby.