Golang SQLite database/sql

We’re Earthly. We make building software simpler and therefore faster. Earthly is open-source and written in go. So if you’re interested in a simpler way to build then check us out.

Welcome back. I’m an experienced developer, learning Golang by building an activity tracker. Last time I made a command-line client to connect to the JSON Service, but today is all about database persistence using database/sql.

If you’re curious about the basics of storing persistent data into a SQL database using Golang, this tutorial will be helpful for you. I’m going to be using sqlite3, but I’ll add lots of headings, so you can skip ahead if sqlite is not your thing.

My plan is to add SQLite persistence to the backend service so that my workouts aren’t lost if the service goes down. And once I have that, I’ll add the --list command to my command line client and add an end point for it. it’s the type of feature that is simple to do with a SQL backend.

Install SQLite

The first thing I need is to set up my dev environment. I need to install SQLite3 and SQLite-utils:

brew install sqlite3

brew install sqlite-utilsFun Tool: sqlite-utils

sqlite-utils is a handy tool for working with SQLite databases at the command line. It makes it simple to query for results or insert records from your terminal.

In particular sqlite-utils is good at is creating a database schema based on a CSV or JSON schema. So if I start up the service and get a sample JSON doc:

> curl -X GET -s localhost:8080 -d '{"id": 1}' | jq '.activity'

{

"time": "2021-12-09T16:34:04Z",

"description": "bike class",

"id": 1

}Then I can use sqlite-utils to create a database and table based on this JSON document’s structure:

$ curl -X GET -s localhost:8080 -d '{"id": 1}' | \

jq '.activity' | \

sqlite-utils insert activities.db activities - That gives me a good starting point for creating my table – I can never remember the CREATE TABLE syntax – and I can use sqlite-utils to return the schema it created:

> sqlite-utils schema activities.dbCREATE TABLE [activities] (

[id] INTEGER

[time] TEXT,

[description] TEXT,

);I’m going to create this sqlite3 schema manually, but it’s helpful to know you can use a tool to create a schema for you.

SQLite3 Create Database

SQLite databases are stored in files with the .db extension. I can create one with the schema I want using the sqlite3 command line tool like this:

sqlite3 activities.dbSQLite version 3.32.3 2020-06-18 14:16:19

Enter ".help" for usage hints.

sqlite> sqlite> CREATE TABLE [activities] (

...> id INTEGER NOT NULL PRIMARY KEY,

...> time DATETIME NOT NULL,

...> description TEXT

...> );SQLite, Data Types, and database/sql

You may notice that I’m storing time as DATETIME whereas sqlite-utils suggested TEXT for that column. SQLite is an amazing database but it has an unusual stance on types: it doesn’t really care about static types. Richard Hipp, the creator, doesn’t even like the term static types. He prefers to call them rigid types ( which he thinks are often a mistake.1)

Because of this stance, there is no statically verified TIME or DATETIME type in SQLite. Only INTEGER, REAL, TEXT, and BLOB. If you set the type as DATETIME, you can insert anything you want into it because it’s stored as TEXT on disk:

sqlite> insert into activities values

...> (NULL,"not a date","christmas eve bike class");

sqlite> select * from activities;

1|not a date|bike classWhy then am I using DATETIME? Well, It’s helpful to document the type of the field, and also, I’m going to be using database/sql in my service, and its scan function may use the column types when converting row values.

Populating the SQLite Database

I’m going to add some sample data to the database.

sqlite> insert into activities values

(NULL,"2021-12-09T16:34:04Z","christmas eve bike class");

sqlite> insert into activities values

(NULL,"2021-12-09T16:56:12Z","cross country skiing is horrible and cold");

sqlite> insert into activities values

(NULL,"2021-12-09T16:56:23Z","sledding with nephew");I can see the data like this:

sqlite> select * from activities;

1|2021-12-09T16:34:04Z|christmas eve bike class

2|2021-12-09T16:56:12Z|cross country skiing is horrible and cold

3|2021-12-09T16:56:23Z|sledding with nephewCommands in sqlite3 start with a dot . so I exit like this:

sqlite> .exitBy the way, I rarely select data using the sqlite3 client. Instead, I like to use sqlite-utils which has a nice table output view:

sqlite-utils activities.db "select * from activities" --table id time description

---- -------------------- -----------------------------------------

1 2021-12-09T16:34:04Z christmas eve bike class

2 2021-12-09T16:56:12Z cross country skiing is horrible and cold

3 2021-12-09T16:56:23Z sledding with nephewYou can also set .mode box in your .sqliterc to get a nicer output out of sqlite3.

sqlite-utils also has a dump command, which is helpful if I want a text backup of my database contents to version control.

> sqlite-utils dump activities.dbBEGIN TRANSACTION;

CREATE TABLE [activities] (

id INTEGER NOT NULL PRIMARY KEY,

time TEXT,

description TEXT

);

INSERT INTO "activities" VALUES(1,'2021-12-09T16:34:04Z','christmas eve bike class');

INSERT INTO "activities" VALUES(2,'2021-12-09T16:56:12Z','cross country skiing is horrible and cold');

INSERT INTO "activities" VALUES(3,'2021-12-09T16:56:23Z','sledding with nephew');

COMMIT;With my database in place, I can now start in on changes to the service.

Golang SQLite Setup

To use sqlite3 from Golang, I need a database driver. I’m going to use go-sqlite3 which I can install like this:

go get github.com/mattn/go-sqlite3Installing go-sqlite3 requires gcc and CGO_ENABLED=1

Finally, let’s jump into the Golang code.

Earthly makes CI/CD super simple

Fast, repeatable CI/CD with an instantly familiar syntax – like Dockerfile and Makefile had a baby.

Golang SQL Repository

Previously server.Activities contained a slice of api.Activity. Now I’m going to update it to contain a pointer to a sql.DB. This will be my database handle. It will be how I store and retrieve the activity records. Here is a diff:

type Activities struct {

mu sync.Mutex

- activities []api.Activity

+ db *sql.DB

}sql.DB is not SQLite-specific. It can represent a connection to any relational database with a driver. Let’s connect to it.

Database Connection Open

For this round I’m going to hard code my file path:

const file string = "activities.db"I can then initialize my db handle using open:

db, err := sql.Open("sqlite3", file)If I run this, I get this helpful error message:

sql: unknown driver "sqlite3" (forgotten import?)database/sql doesn’t know about github.com/mattn/go-sqlite3 and it’s helpfully telling me that I probably need to import it.

import (

"database/sql"

"errors"

"log"

"sync"

api "github.com/adamgordonbell/cloudservices/activity-log"

+ _ "github.com/mattn/go-sqlite3"

)After doing that, things seem to work.

database/sql Drivers

It seems a bit magical for an import to change execution, but the reason is that db.Open looks into a map of drivers (drivers[driverName]) for the driver matching sqlite3. And sqlite3 gets in that map via the initialization of github.com/mattn/go-sqlite3. Also, the error message told me precisely what to do, which was nice.

Initialize Database and Setup Schema

I want my service to bootstrap the database itself if one doesn’t exist. After all, one of the nice things about SQLite is how quickly you can create a database. For now, I’m going to initialize things by using a CREATE TABLE IF NOT EXISTS statement. This way, I can keep my existing data if I have it, but I can recreate things if I don’t.

const create string = `

CREATE TABLE IF NOT EXISTS activities (

id INTEGER NOT NULL PRIMARY KEY,

time DATETIME NOT NULL,

description TEXT

);`I’ll execute that with db.Exec, meaning my whole database handle constructor looks like this:

func NewActivities() (*Activities, error) {

db, err := sql.Open("sqlite3", file)

if err != nil {

return nil, err

}

if _, err := db.Exec(create); err != nil {

return nil, err

}

return &Activities{

db: db,

}, nil

}Golang Insert Into Database

I can now use my sql.db handle to insert data and get back the primary-key. The most concise way to do this is using db.Exec like this:

func (c *Activities) Insert(activity api.Activity) (int, error) {

res, err := c.db.Exec("INSERT INTO activities VALUES(NULL,?,?);", activity.Time, activity.Description)

if err != nil {

return 0, err

}

var id int64

if id, err = res.LastInsertId(); err != nil {

return 0, err

}

return int(id), nil

}And I can test my insert code with curl:

curl -X POST -s localhost:8080 -d \

'{"activity": {"description": "christmas eve bike class", "time":"2021-12-09T16:34:04Z"}}'{"id":5}A quick check with sqlite-utils shows that my results were written to the db:

> sqlite-utils activities.db "select * from activities" --table id time description

---- ------------------------- -----------------------------------------

1 2021-12-09 16:34:04+00:00 christmas eve bike class

2 2021-12-09 16:56:12+00:00 cross country skiing is horrible and cold

3 2021-12-09 16:56:23+00:00 sledding with nephew

4 not a date christmas eve bike class

5 2021-12-09 16:34:04+00:00 christmas eve bike classGolang Select Row.Scan

Now that I can insert data into the database, its time to get some data back out.

I can use sql.DB.query for my retrieval by id:

row, err := c.db.Query("SELECT * FROM activities WHERE id=?", id)

if err != nil {

return nil, err

}Query, but there is a better way.

But doing this gives me back sql.Rows, a cursor that points to possibly many rows.

Using it, I’ll have to handle the possibility of multiple rows coming back — since that is impossible with my primary key based query I’d probably just assume I always get a single row back.

Thankfully, I can use sql.QueryRow, which does just this:

QueryRow executes a query that is expected to return at most one row. QueryRow always returns a non-nil value. Errors are deferred until Row’s Scan method is called. if the query selects no rows, the Row’s Scan will return ErrNoRows. Otherwise, theRow’s Scan scans the first selected row and discards the rest.

My usage looks like this:

row := c.db.QueryRow("SELECT id, time, description FROM activities WHERE id=?", id)To convert the database values into my struct api.Activity I used row.Scan ( or row.Scan for multiple rows). It copies columns from the row into the value pointed at by each of its arguments.

With that in place, my full SQL select to struct code looks like this:

func (c *Activities) Retrieve(id int) (api.Activity, error) {

log.Printf("Getting %d", id)

// Query DB row based on ID

row := c.db.QueryRow("SELECT id, time, description FROM activities WHERE id=?", id)

// Parse row into Activity struct

activity := api.Activity{}

var err error

if err = row.Scan(&activity.ID, &activity.Time, &activity.Description); err == sql.ErrNoRows {

log.Printf("Id not found")

return api.Activity{}, ErrIDNotFound

}

return activity, err

}Understanding Scan

rows.Scan has no problem handling cases where the column value is an integer and destination value is also an integer – it just copies the row value into the pointed at destination value.

But how can it convert the string returned by SQLite into a time.Time? After all 01/12/2022 means different things depending on whether you expect DD/MM/YYYY or MM/DD/YYY, and SQLite stores these dates as strings on disk.

It turns out that Scan handles more complex types by implementing the scanner interface, which looks like this:

type Scanner interface {

Scan(src interface{}) error

}However, time values come in from the driver as time.Time and get mapped to other values using convertAssign like this:

case time.Time:

switch d := dest.(type) {

case *time.Time:

*d = s

return nil

case *string:

*d = s.Format(time.RFC3339Nano)

return nil

case *[]byte:

if d == nil {

return errNilPtr

}

*d = []byte(s.Format(time.RFC3339Nano))

return nil

case *RawBytes:

if d == nil {

return errNilPtr

}

*d = s.AppendFormat((*d)[:0], time.RFC3339Nano)

return nil

}So if SQLite is storing dates and time as strings and database/sql is getting them as time.Time then where is the conversion taking place? And this does matter – if I import data from another source, I want to make sure it will get converted correctly.

Well, after a little digging into go-sqlite3 and I found this:

// SQLiteTimestampFormats is timestamp formats understood by both this module

// and SQLite. The first format in the slice will be used when saving time

// values into the database. When parsing a string from a timestamp or datetime

// column, the formats are tried in order.

var SQLiteTimestampFormats = []string{

// By default, store timestamps with whatever timezone they come with.

// When parsed, they will be returned with the same timezone.

"2006-01-02 15:04:05.999999999-07:00",

"2006-01-02T15:04:05.999999999-07:00",

"2006-01-02 15:04:05.999999999",

"2006-01-02T15:04:05.999999999",

"2006-01-02 15:04:05",

"2006-01-02T15:04:05",

"2006-01-02 15:04",

"2006-01-02T15:04",

"2006-01-02",

}So, that list of priority order formats drives the conversion process.

As long as my dates strings are in one of these formats, they will get correctly converted when I read them out. And when I’m inserting records, the first format in the list will be used to transform my time.Time to a database string.

Retrieving Many Rows With rows.Scan

Now I can add my -list endpoint. It follows a similar pattern as Retrieve (-get) but using db.Query:

func (c *Activities) List(offset int) ([]api.Activity, error) {

rows, err := c.db.Query("SELECT * FROM activities WHERE ID > ? ORDER BY id DESC LIMIT 100", offset)

if err != nil {

return nil, err

}

defer rows.Close()

data := []api.Activity{}

for rows.Next() {

i := api.Activity{}

err = rows.Scan(&i.ID, &i.Time, &i.Description)

if err != nil {

return nil, err

}

data = append(data, i)

}

return data, nil

}Prepared Statements

It takes time for SQLite to parse the strings of SQL I’m sending it. And since the SQL never changes, using prepared statements is a great option to consider.

To do so, I would need to add them to my Activities struct and initialize them with the database handle init code.

type Activities struct {

db *sql.DB

mu sync.Mutex

+ insert, retrieve, list *sql.Stmt

}

func NewActivities() (*Activities, error) {

db, err := sql.Open("sqlite3", file)

if err != nil {

return nil, err

}

if _, err := db.Exec(create); err != nil {

return nil, err

}

+ insert, err := db.Prepare("INSERT INTO activities VALUES(NULL,?,?);")

+ if err != nil {

+ return nil, err

+ }

...

return &Activities{

db: db,

+ insert: insert,

+ retrieve: retrieve,

+ list: list,

}, nil

}And then I could use these statements to perform any SQL work, saving the parsing:

res, err := insStmt.Exec(activity.Time, activity.Description)

if err != nil {

return 0, err

}I’d also have to remember to close these statements when my db handle was closed.

This seems like overkill for my activity tracker, so I’ll avoid this for now. But I did find this example helpful for understanding what using prepared statements would look like.

Testing List

With that list method threaded through to /list I can start pulling out lists of items using curl:

> curl -X GET -s localhost:8080/list -d '{"offset": 1}' | jq .[

{

"time": "2022-01-09T16:56:23Z",

"description": "sledding with nephew",

"id": 3

},

{

"time": "2022-01-02T16:56:12Z",

"description": "cross country skiing",

"id": 2

}

]And then calling it with my command-line client:

go run cmd/client/main.go --listID:3 "sledding with nephew" . 2022-01-9

ID:2 "cross country skiing " 2022-01-2

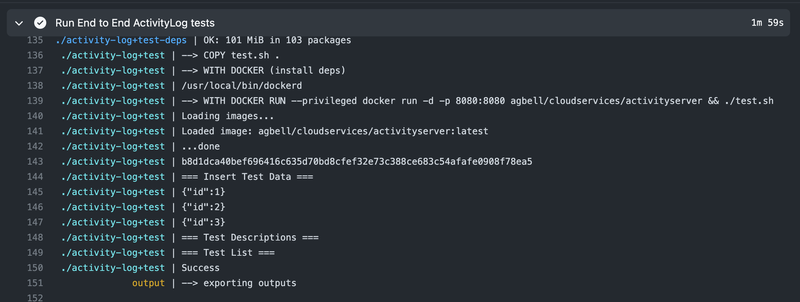

ID:1 "christmas eve bike class" 2021-12-24And I can also use Earthly to test my CI integration tests:

> earthly -P +test

+build | --> RUN go build -o build/activityserver cmd/server/main.go

+build | # github.com/mattn/go-sqlite3

+build | cgo: exec gcc: exec: "gcc": executable file not found in $PATHOh yeah, the SQLite driver! Our driver needs GCC to build and since our builds are running in a container, for repeatability, we need to add GCC to our build script.2

VERSION 0.6

FROM golang:1.15-alpine3.13

WORKDIR /activityserver

+ # Install gcc compiler

+ RUN apk add build-base

deps:

COPY go.mod go.sum ./

RUN go mod download

+ RUN go get github.com/mattn/go-sqlite3

SAVE ARTIFACT go.mod AS LOCAL go.mod

SAVE ARTIFACT go.sum AS LOCAL go.sum

build:

FROM +deps

COPY . .

RUN go build -o build/activityserver cmd/server/main.go

SAVE ARTIFACT build/activityserver /activityserver

...With these changes, my previously written containerized integration now tests from network request to the filesystem, including creating a new database.

Here it is in GitHub Actions:

Now my activity service has a persistence layer, and I learned quite a bit about how database/sql, sqlite3, sqlite-utils and github.com/mattn/go-sqlite3 work. Thank you for coming along on the journey with me. I didn’t show all the code changes here, but you can find the diff and the full code on GitHub.

What’s Next

Next, I’m planning to explore gRPC and protocol buffers, along with considering richer records and reporting options. Also if you’re building with Golang, consider giving Earthly a whirl for consistent and efficient builds.

And if you want to be notified about the next installment, sign up for the newsletter:

<div>

<div class="text-3xl font-bold tracking-tight text-black/80">

Get notified about new articles!

</div>

<div class="mb-4 opacity-80 mt-1">

We won't send you spam. Unsubscribe at any time.

</div>

</div>

<script src="https://f.convertkit.com/ckjs/ck.5.js"></script>

<div class="block rounded-lg shadow-lg bg-white px-6 py-4">

<div class="newsletter text-2xl font-bold mb-1">Subscribe to the Newsletter</div>

<form id="embedded-newsletter-form" action="https://app.convertkit.com/forms/2810221/subscriptions" markdown="0"

class="bg-white" method="post" data-sv-form="2810221" data-uid="c350589119" data-format="inline"

data-version="5"

data-options='{"settings":{"after_subscribe":{"action":"message","success_message":"Success! Now check your email to confirm your subscription.","redirect_url":""},"analytics":{"google":null,"facebook":null,"segment":null,"pinterest":null,"sparkloop":null,"googletagmanager":null},"modal":{"trigger":"timer","scroll_percentage":null,"timer":5,"devices":"all","show_once_every":15},"powered_by":{"show":true,"url":"https://convertkit.com/features/forms?utm_campaign=poweredby&utm_content=form&utm_medium=referral&utm_source=dynamic"},"recaptcha":{"enabled":false},"return_visitor":{"action":"show","custom_content":""},"slide_in":{"display_in":"bottom_right","trigger":"timer","scroll_percentage":null,"timer":5,"devices":"all","show_once_every":15},"sticky_bar":{"display_in":"top","trigger":"timer","scroll_percentage":null,"timer":5,"devices":"all","show_once_every":15}},"version":"5"}'

min-width="400 500 600 700 800">

<div class="formkit-background" style="opacity: 0.2"></div>

<div data-style="minimal">

<div data-element="fields" data-stacked="false" class="seva-fields formkit-fields">

<div class="formkit-field">

<input id="embedded-newsletter-form-email" class="border rounded-md p-2 mb-2 w-3/4" name="email_address" aria-label="Email Address"

placeholder="Email Address" required="" type="email" />

</div>

<button data-element="submit" class="formkit-submit formkit-submit w-44 pt-1 pb-1" style="

color: rgb(255, 255, 255);

background-color: rgb(22, 119, 190);

border-radius: 4px;

font-weight: 400;

">

<div class="formkit-spinner">

<div></div>

<div></div>

<div></div>

</div>

<span class="">Subscribe</span>

</button>

</div>

<div class="formkit-guarantee" data-element="guarantee">

</div>

</div>

<style></style>

</form>

</div>

<script>

const form = document.getElementById('embedded-newsletter-form');

form.addEventListener('submit', function (event) {

var formEmail = document.getElementById('embedded-newsletter-form-email').value;

analytics.identify(formEmail);

analytics.track('embedded-newsletter-form', {

category: 'Form Submission',

label: 'embedded-newsletter-form'

});

});

</script>bbkane_pointed out to me that SQlite now has a STRICT mode. It doesn’t support DateTime so far, but perhaps it one day will.↩︎There is a machine translated pure Go SQLITE implementation that saves you from needing GCC, although it is slower and probably less extensively tested. Thanks again

bbkane_↩︎